On this page you'll find all the most common models to use with the webGUI along with some rarer models made by the community. Download links are provided for all models.

Base Models

Base models are versatile AI models that are capable of generating a wide range of styles, characters, objects, and other types of content. Stable Diffusion is a popular base model that has been used to train other models in different styles or to improve overall model performance. These models often have their own VAE (Variable Auto-Encoder) that can be used interchangeably with other models to produce slightly different outputs. Unlike Dreambooth models, base models do not require an activator prompt and can be used in a more flexible way.

Stable Diffusion in particular is trained competely from scratch which is why it has the most interesting and broard models like the text-to-depth and text-to-upscale models.

Stable Diffusion

Stable Diffusion is the primary model that has they trained on a large variety of objects, places, things, art styles, etc. It is the best multi-purpose model.

The latest version of the Stable Diffusion model will be through the StabilityAI website, as it is a paid platform that helps support the continual progress of the model. You can try out the Stablitiy AI's website here.

Version 2.1

Stable Diffusion 2.1 was released on December 8, 2022. In response to the controversial release of 2.0, Stability AI has improved upon their base model and fine-tuned it with a weaker NSFW filter applied to their dataset. This should address many of the criticisms of the previous version and result in more accurate generation of human bodies, celebrities, and other pop culture images. As this is a fine-tuned model, there are no major changes to its functionality, and the main purpose is to correct the mistakes of 2.0.

If these fixes are successful, 2.1 will be an excellent model with higher detail and quality in its outputs, as well as a stronger ability to be trained on specific themes, styles, and objects using techniques such as Dreambooth, Textual Inversion, and Hypernetworks. You can download the 2.1 Stable Diffusion model here (requires a free account).

NOTE: In order to use the 2.1 version you will need to include a .yaml file

and rename it either

For more information, see the announcement post on Reddit.

Version 2.0 - TXT2IMG - DEPTH2IMG - Inpainting - Upscaling models

On 24/11/22 Stable Diffusion version 2.0 was released, you can see the Reddit announcement post here for a brief overview.

2.0 has been trained from scratch meaning it has no relation to previous Stable Diffusion models and incorporates new technology the OpenCLIP text encoder & the LAION-5B dataset with NSFW images filtered out. To most peoples surprise, version 2.0 actually performs relatively worse in general tests of generating images, particularly with artstyles, celebrities and NSFW images. This is a conscious decision by the Stablility AI team for a few reasons and in my opinion would be related to legality issues that have arose from the growing popularity of AI generation.

There are multiple models available with 2.0 each with a different purpose. The most interesting new model is the depth model which is to be used with IMG2IMG and can actually detect depth information within and image and manipulate the image while retaining that depth information. Depth-to-Image cannot be used with txt-to-image. It can be incredibly useful to edit your image without changing or adding/removing elements that aren't consistent with the original image.

One big improvement is the ability to generate images at 512x512 & 768x768. This means you

can generate higher quality images natively with Stable Diffusion without the need of

upscaling or using something like the "high-res fix" on the AUTOMATIC1111 WebGUI. Be sure to

include the

At the time of writing, these new models are not compatible with most UI programs as the core mechanics of the model have changed compared to previous models. But it should only be a matter of time before UI's are updated to support this model.

One drawback of this new model is that it will not work as well with NSFW images as Stablility AI have purposefully tried to filter out NSFW imagery. This shouldn't be a horrible thing for most people and for those that do want NSFW images, it will simply require others to train the model on those images for it to improve at them.

StabilityAI themselves have stated that this model is meant to be used as a base for other models to be trained on. So while the results of version 2.0 are not as amazing as people have hoped for, it opens the possibility of better dreambooth, fine-tuned, textual inversions and other model training methods to produce greater results.

You can download the 2.0 Stable Diffusion model here (requires free account).

Version 1.5 - Inpainting

This model is basically a fork (a branching version) of the Stable Diffusion model that is based of version 1.5 of SD. It has been trained for 440k more steps than the original 1.5 to specialise in inpainting and improving the ability to remove, add and replace objects in a scene. The current version is 1.5 but it should not be confused with the original Stable Diffusion 1.5.

You can download the latest Stable Diffusion model here (requires free account).

Version 1.5

Stable Diffusion 1.5 has been released on 21/10/22. This version has been considered a minor improvement on the previous version with some better txt2img generation.

If you're only looking to generate images, make sure to down load the

You can download the 1.5 Stable Diffusion model here (requires free account).

Version 1.4

Version 1.4 is the model I began my AI journey with so I cannot speak on previous iterations.

You can download the 1.4 Stable Diffusion model here (requires free account).

Waifu Diffusion

Waifu Diffusion is a model trained on over 50 thousand anime related images and is continually being trained with improvements released regularly. It is currently the best model for creating anime characters, but is much weaker at realistic imagery and landscapes.

1.4 Version

Waifu Diffusion 1.4 was released around the start of January 2021. There is not a lot of information I can find about this new version at the current time.

Be sure to include the

You can download the latest Beta model here (requires free account).

1.4 Version Beta

Waifu Diffusion 1.4 is still in very early stages, but they repo is already created so if you want to keep up to date on the latest WD version, you can check this repo and download the beta models as they become available.

As of 03/11/22 there is a ckpt model available on this repository named

You can download the latest Beta model here (requires free account).

Version 1.3.5

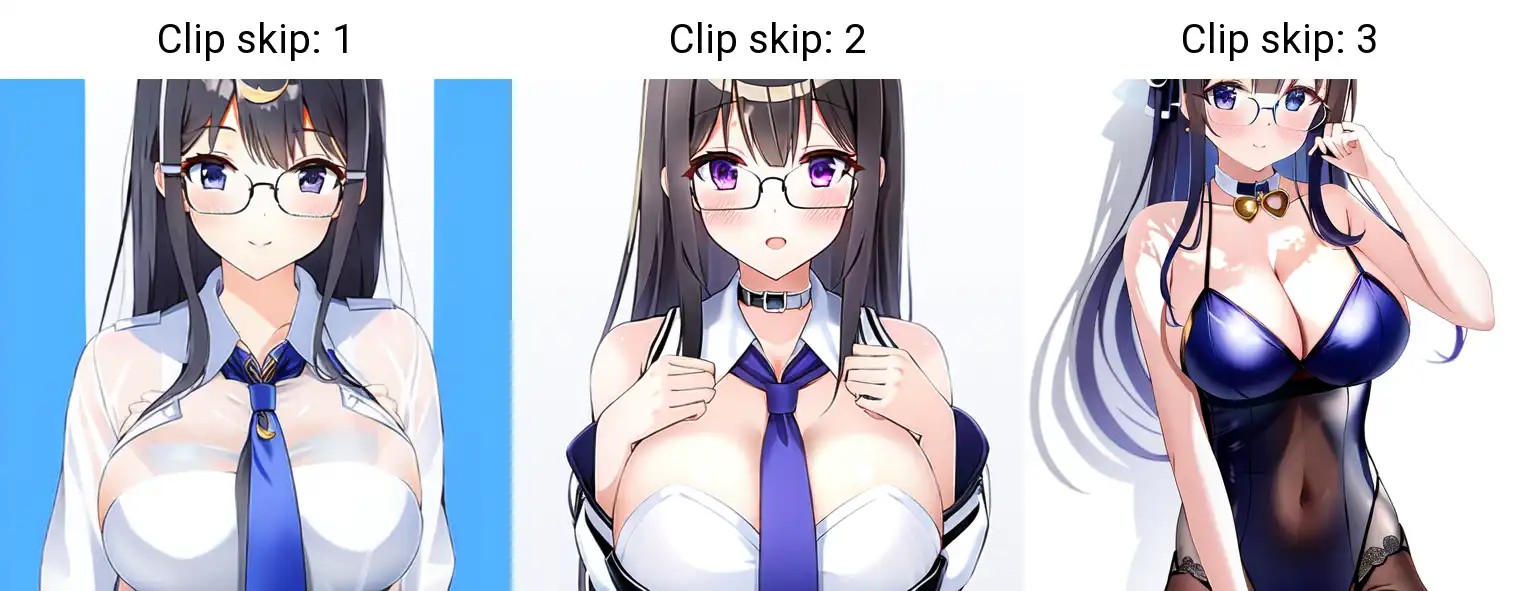

Version 1.3.5 looks to be an experimental alpha version that hakurei has released while they work on version 1.4. As with the beta 1.4 models, it requires CLIP skip for better results from what I have seen in my own testing. I would recommend CLIP SKIP 2 or 3.

You can find the 1.3.5 model in the 1.4 Github repository. Use the same link as above for v1.4.

Version 1.3

Version 1.3 model has greatly improved the consistency and variety of images the Waifu

Diffusion can produce. However, older prompts will obviously create different images even if

you use the same seed, prompts, etc. Because of this, it will take some time to figure out

what new prompt lists you need to create your specific styles. I’d recommend doing a lot of

testing yourself with different prompts, prompt order and other parameters to find what

works with the new model. Another change to version 1.3 is that it relies on ‘comma

separated tags’ more so than previous iterations. So, in the example

You can download the 1.3 Waifu Diffusion model here (requires free account).

Trinart

Trinart Stable Diffusion is another anime-based model. Its results are currently less cohesive compared to Waifu Diffusion, but it can still generate excellent results. It could also give you a unique art style compared to Waifu Diffusion because they trained it on a different dataset.

You can download the latest Trinart model here (requires free account).

Eimis Anime Diffusion

Eimis's Anime model is based on highly detailed anime images and produces images at the same quality as models like Novel AI's or Anything v3. It's a different style to those models and one I personally prefer. If you're going for the classic anime look, this may not be for you, but if you're wanting detailed Artstation-like anime, this is perfect.

There are two Eimis models, one for a strong anime style and one for a more realistic anime style. Both produce high quality images in an artistic style but will not be able to generate photorealistic images.

You can download the latest Eimis Anime model here (requires free account).

Anything Model

Anything 4.0 & 4.5

I'm unsure if this is an official continuation of the Anything model, but according to the huggingface page, this has been popular.

The huggingface files include a 4.5 version. There is no information on how these models have been trained, however it is stated that there is a mix of AbyssOrangeMix2 used with this model.

You can download the latest Anything 4.0 model here (requires free account).

Anything 3.0

Anything 3.0 is a model similar to Waifu Diffusion, but with a more specific anime style. It has gained popularity for its ability to consistently produce high-quality artworks, some of which are on par with the closed-source NovelAI model. However, the distinct style of Anything 3.0 can be limiting, as it only allows for the generation of images in this specific art style, which can become repetitive over time.

You can download the latest Anything 3.0 model here (requires free account).

Honey Diffusion

Honey Diffusion is a realistic anime-style model with a distinctive style characterized by a more desaturated look and limited facial features. It is known for producing consistently good-quality images with less deformity than other models, making it difficult to create a poor image. The model produces good 3D/2D images, has good anatomy, and produces good reflections in glass and mirrors. It is suitable for both SFW and NSFW images, although there is limited information available on how the model was created.

You can download the Honey Diffusion model here

Myne Factory

Myne Factory is an anime model that sets out to differentiate itself from other models. The team behind it aims to create a model that overcomes the shortcomings of other models, particularly the repetitive style and the easy-to-spot look of some anime models. When using Myne Factory, it is recommended that you use Booru style tags when prompting for best results. According to the information page, shorter prompts work better than longer ones.

Version 1.0

Version 1.0 is based on the Waifu Diffusion 1.4 model and has many similarities with that model in terms of usage and outputs. However, there are plans for the next major version to be based on Stable Diffusion. It's important to note that you should set

You can download the Myne Factory model here.

Seek Art MEGA

Seek.art MEGA is a model that has been fine-tuned on Stable Diffusion v1.5 with the goal of improving the overall quality of images while maintaining the flexibility of Stable Diffusion. It was trained on 10,000 high-quality public domain digital artworks, which is beneficial in the current state of copyright and other issues faced by AI generation. It is recommended to generate images at a size above 640px for optimal results.

The creator of Seek.art MEGA has indicated that they will update the model once tools are available to fine-tune based on Stable Diffusion v2.0.

Please note that the creator of this model does not allow the commercial use of this model without express written permission. Use at your own risk.

You can download the latest Seek Art MEGA model here (requires free account).

F222

F222 is a machine learning model based on SD 1.5 by Zeipher AI. It has been trained on a collection of NSFW (not safe for work) photography and other photographic images, which makes it particularly good at generating nude or semi-nude persons. However, it can also generate clothed individuals with fewer deformities. At the time of writing, I have not been able to find much information on this model, and the creator's website is currently offline. Overall, it seems that F222 is simply an improved version of SD 1.5 with better support for NSFW images.

You can download the F222 model here.

Hentai Diffusion

A model based on Waifu Diffusion 1.2 and trained on 150k images from R34 and gelbooru. As the name suggests, their focus is on hentai related images and improving hands, obscure poses and general consistency of the model.

Adding the prompt

Update - 09/02/23

I am unsure of when this update officially happened, but Hentai Diffusion has moved over to an official website that is more sleek and easy to navigate. It includes

The new website provides more details on how to get started using the model, provides tips on how to use the model and also provides extra embeddings and style guides. Overall it is a nice change and with it seems to be a new HD-22 model.

The Cognitionai team have also provide a negative prompt list that they recommend using with the HD-22 model.

View negative prompt

Visit the official website at https://www.cognitionai.org/hdhowtogetstarted

Update - 12/10/22

The newest update to Hentai Diffusion has improved coherency of image sequences for better animation, more variety in obscure camera angles and better photo to anime conversion. I am yet to test this new model, but If it is anything like the jump Waifu Diffusion made between v1.2 and v1.3, it should be substantial.

You can check the old Huggingface page here (requires free account).

Grapefruit

Grapefruit is a model that is primarily used for generating hentai content, but it can also produce solid SFW (safe for work) content. There have been several iterations of this model, and as of writing, it is up to version 4, which includes a separate inpainting model. Grapefruit aims to have a bright and softer anime art style while also providing more lewd and kinky types of poses, imagery, clothing, and more.

It's worth noting that this model is simply a merger of other models. However, due to its popularity and good image quality, it's worth mentioning in this list.

For best results, it is recommended to use Clip Skip 1 to 4, avoid using face restore, and avoid using underscores in your prompts.

You can download the Grapefruit model here.

Lewd Diffusion

A model based on Waifu Diffusion 1.2 and trained on 70k explicit images from Danbooru. They have trained the current version for 2 epochs. As the only link to this model is via a torrent, I have self hosted the file. This means it may not always be the most up-to-date model. If you would like to download via torrent, the URL is:

R34 Diffusion

A model trained on images from the site rule34.xxx with the latest model being trained with 150k

images for 5 epoch. It has a lot of variety in its lewd images and, without specifying a style,

will create more realistic/ 3D model looking characters. If you’re trying to get a specific

artist’s style, be sure to prefix it with

You can download the latest R34 Diffusion model here.

Zack3D Kinky Diffusion

A model based on Stable Diffusion that specializes in furry art and also more kinky art. But it will use a furry character as the subject. E621 tags are used with underscores for multi-word tags.

You can download the latest Zach3D Kinky Diffusion model here.

Furry Diffusion

A model trained on 300k images from e621. As the name suggests, this will only create furry art, so if you're looking for that, you've come to the right model.

There have been more versions of this model released available at the link below however I do not know a lot of information about them. Some also look to require a torrent download.

You can download the latest Furry Diffusion model here.

Yiffy Diffusion

Yiffy is yet another furry model. They have trained the most current model on 210k images from

e621 for over 6 epochs (I’m unable to get a definitive number). One important note when using

this model is that if you want to use the prompt

You can download the latest Yiffy Diffusion model here.

Dreambooth Models

Dreambooth models are specialized versions of larger base models like Stable Diffusion that have been fine-tuned to produce a specific style or character in images. There are many Dreambooth models available, but I will only mention those that are particularly notable or effective. One unique aspect of Dreambooth models is that they require an "activator prompt" to activate the trained style. Without this prompt, the model will behave like the original base model it was trained on.

Analog Diffusion

Analog Diffusion is a model trained from Stable Diffusion v1.5 and features a retro, analog style

photography that can produce very realistic, well-lit imagery. It does take a bit of playing

around with prompts to get good results, but if you use

Analog Diffusion also works very well with the hi-res fix feature of the webGUI and can create great large portrait and landscape images. As wth all Dreambooth models, be sure to use the activator prompt and I would recommend putting the prompt at the front of the list for greatest effect.

A neat tip I found online to add more realism to Analog Diffusion images is to add a grainy photography filter on top of the images. This will greatly improve realism and add the final touch.

Open Journey Diffusion

Midjourney is a popular AI image generator known for its detailed and realistic images. Unlike the open model of Stable Diffusion, there is no open version of Midjourney available. This is where the Dreambooth model "Open Journey" comes in. Open Journey is a model that has been trained on a large collection of images generated by Midjourney, with the goal of allowing the Stable Diffusion v1.5 model to produce results that are closer to the Midjourney style.

Studio Ghibli Diffusion

A model posted on Reddit that was trained by u/IShallRisEAgain that has been trained on roughly 20k images using the Studio Ghibli style. The model is based on Waifu Diffusion 1.3. Click here for the original Reddit post. As this model is small and made by one enthusist, it may not be updated often and may even be unavailable at some point. If this occurs, I will self host the file.

You can download the latest Studio Ghibli Diffusion model here (requires free account.)

Dungeons and Diffusion

A model, created by Reddit user u/FaelonAssere, has been trained to generate Dungeons and Dragons-inspired artwork. Although it is unclear which base model was used, the model was trained on approximately 2,500 images related to D&D races, with a focus on human characters.

Click here for the original Reddit post.

You can download the latest Dungeons and Diffusion model here (requires free account.)

Counterfeit

I came across this model on civitai.com, but there was little information provided about it. Despite this, the example images appear to be of high quality, similar to the Anything model. It is not clear how this model was trained or what the base model was. While it is capable of generating male characters, it seems to have a focus on generating female characters, particularly in the form of wallpaper-style anime girls.

You can download the latest Counterfeit model here

ConceptStream

ConceptStream is a diffusion model that is currently in development. The team behind the project is committed to making it open-source, with the aim of creating a model that enables "continuous storytelling." This refers to the ability to create consistent characters across different scenes, poses, and lighting conditions. While it is an ambitious goal, the team has released an alpha version of the model, which can be downloaded via this Reddit post.

Model Mixes

Model mixes are models that are simply a mix of different models. The really good mixes are usually a mix of 4 or more different models. Anyone is able to make their own mixes, so there are tens of hundreds available. This page will only list ones that are really notable or popular.

Orange Mixes

Abyss Orange Mix does not seem to be a trained model, instead it is a collection of merged models that can create high quality anime style art. As these are only model mixes, there are a large selection to choose from. The Huggingface page includes merge recipes to help you create the Orange mix from scratch.

If you want more NSFW work images, it's recommended to add

For each version of the model, they release different varients that offer different art styles.

You can download the latest Abyss Orange Mix model here (requires free account).

Character Specific Models

Jinx from League of Legends - Model by Reddit user u/jinofcool

Another model posted on Reddit that was trained by u/jinofcool that has been trained to create simplistic app icon style images. It was trained using Dreambooth. The author has not provided much information on the model but it is free to download and use. Click here for the original Reddit post with download in the comments.. As this model is small and made by one enthusist, it may not be updated often and may even be unavailable at some point. If this occurs, I will self host the file.